This is part of Audio Geometry Explorations and one of three pieces in this series (see also Neon Interpolation and Auto Interpolation). I used Google's Performance RNN to generate the music data which drives the animation. Performance RNN is a machine learning piano model, or "an LSTM-based recurrent neural network designed to model polyphonic music with expressive timing and dynamics". You can listen to it here, and if you use Chrome you can output the MIDI data.

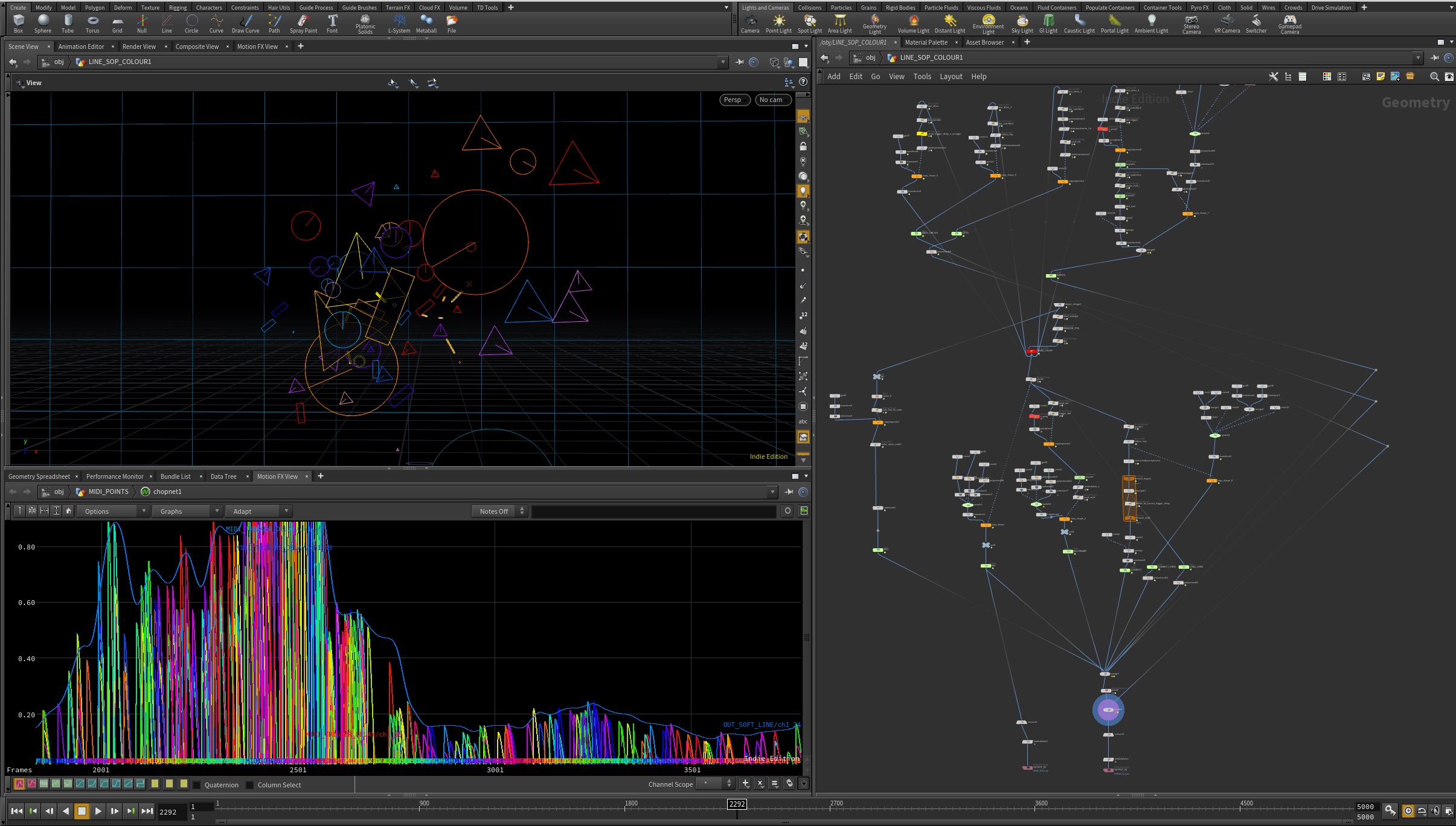

I used Houdini to read the MIDI data and then create the animation. It's entirely procedural, so it uses no keyframes. All I have to do to create a new animation is drop in a new MIDI file. I created the animation setup fairly quickly, on a train journey from Devon to London and back.
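To give a rough idea of what "procedural, no keyframes" can mean here, below is a minimal Python sketch of turning note events into per-frame values. It is not my actual Houdini network: the `Note` class, the `note_level` and `frame_levels` functions, the linear fade-out and the 24 fps default are all assumptions made for illustration, and the notes are typed in by hand rather than read from a MIDI file.

```python
from dataclasses import dataclass


@dataclass
class Note:
    """One note event (hypothetical structure, not a Houdini or MIDI API)."""
    start: float     # note-on time in seconds
    duration: float  # held time in seconds
    pitch: int       # MIDI note number, 0-127
    velocity: int    # MIDI velocity, 0-127


def note_level(note, t, release=0.5):
    """Return a 0..1 level for one note at time t (seconds)."""
    if t < note.start:
        return 0.0
    peak = note.velocity / 127.0
    end = note.start + note.duration
    if t <= end:
        return peak
    # Assumed behaviour: linear fade-out over `release` seconds after note-off.
    fade = 1.0 - (t - end) / release
    return peak * max(fade, 0.0)


def frame_levels(notes, frame, fps=24.0):
    """Map each active pitch to its level at the given frame."""
    t = frame / fps
    levels = {}
    for n in notes:
        level = note_level(n, t)
        if level > 0.0:
            # If the same pitch overlaps itself, keep the louder value.
            levels[n.pitch] = max(level, levels.get(n.pitch, 0.0))
    return levels


# Two hand-written notes standing in for data read from a MIDI file.
notes = [Note(0.0, 1.0, 60, 127), Note(0.5, 0.5, 64, 64)]
for frame in (0, 12, 30, 48):
    print(frame, frame_levels(notes, frame))
```

Because every frame is computed directly from the note list, swapping in a different list of notes gives a different animation with no manual animation work, which is the same idea as dropping in a new MIDI file.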

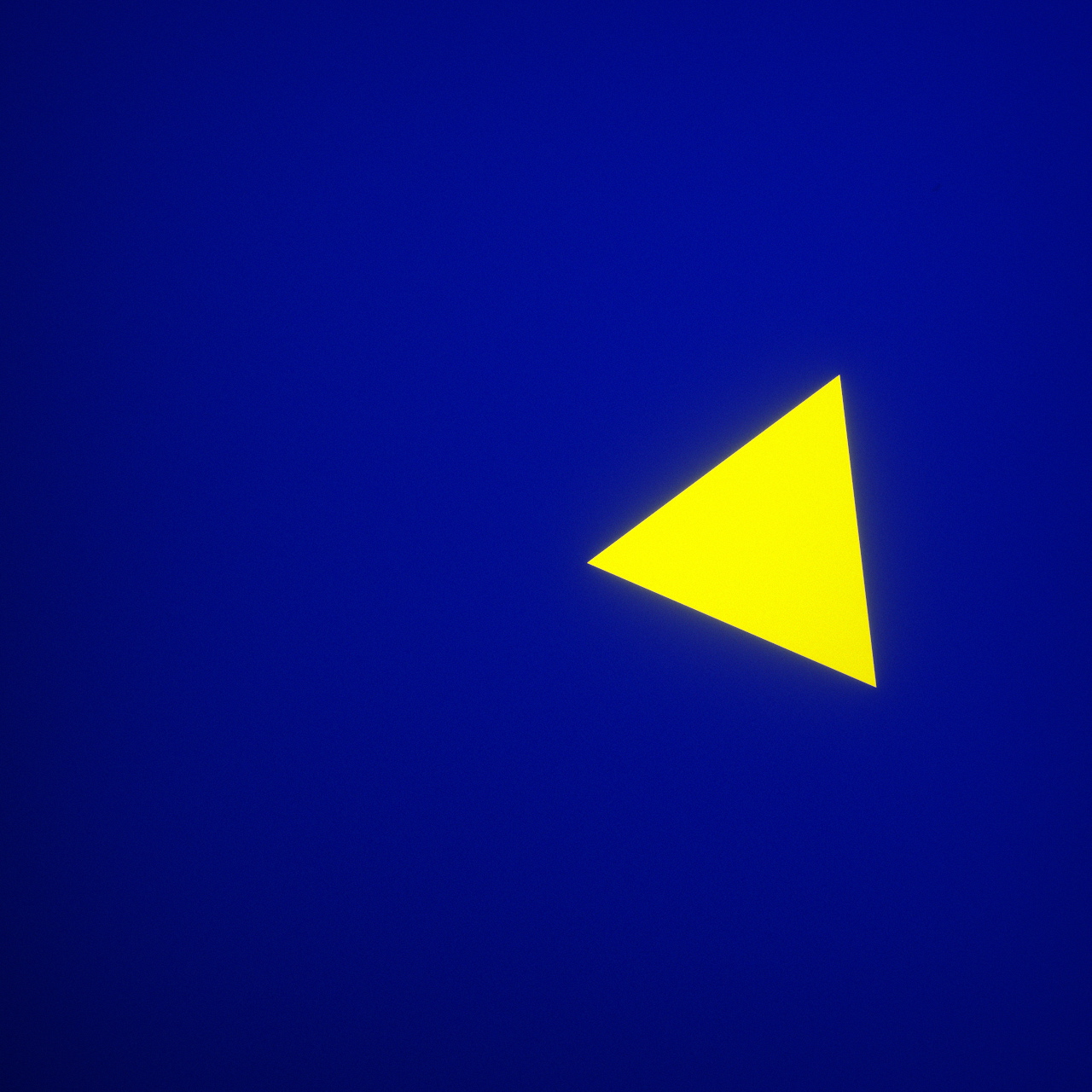

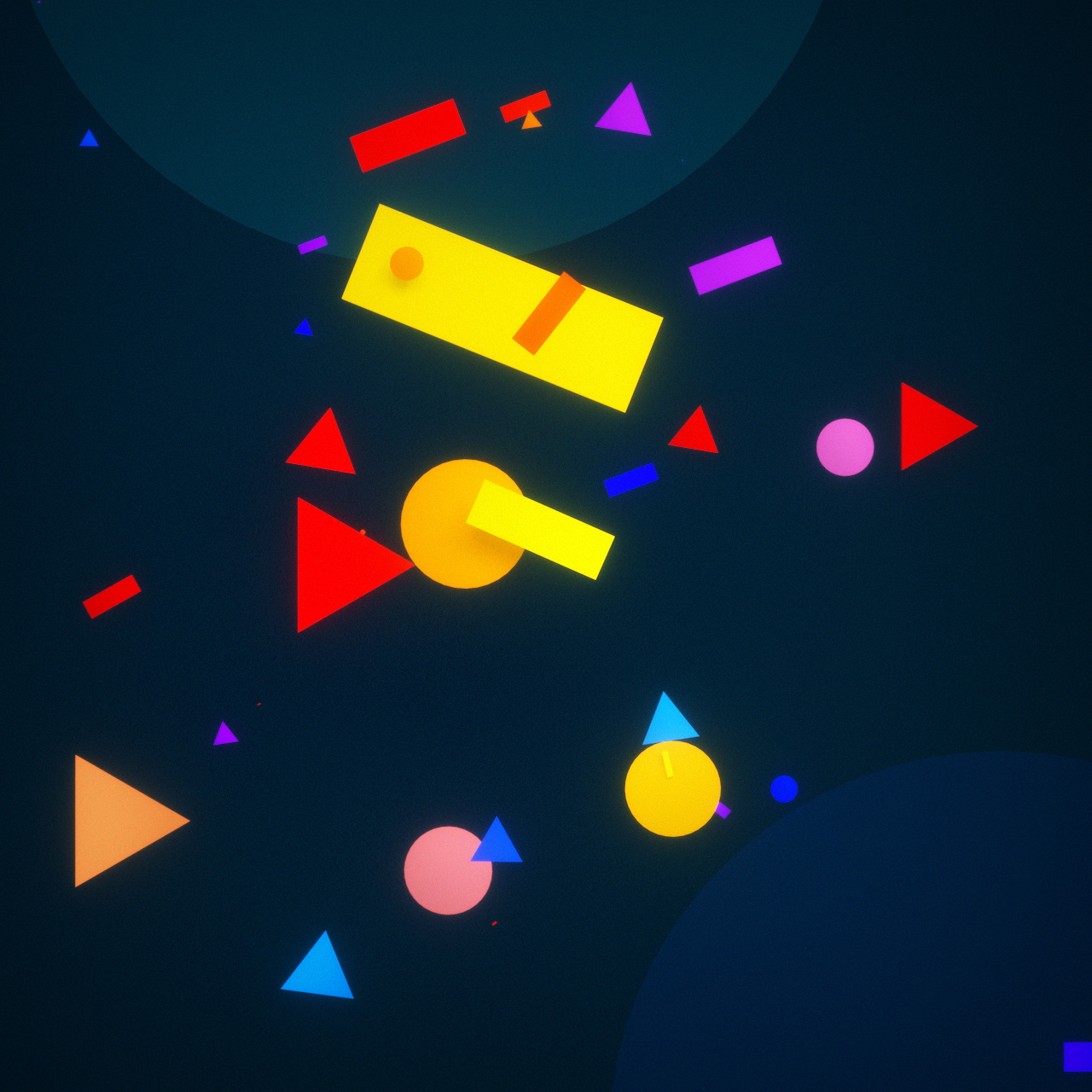

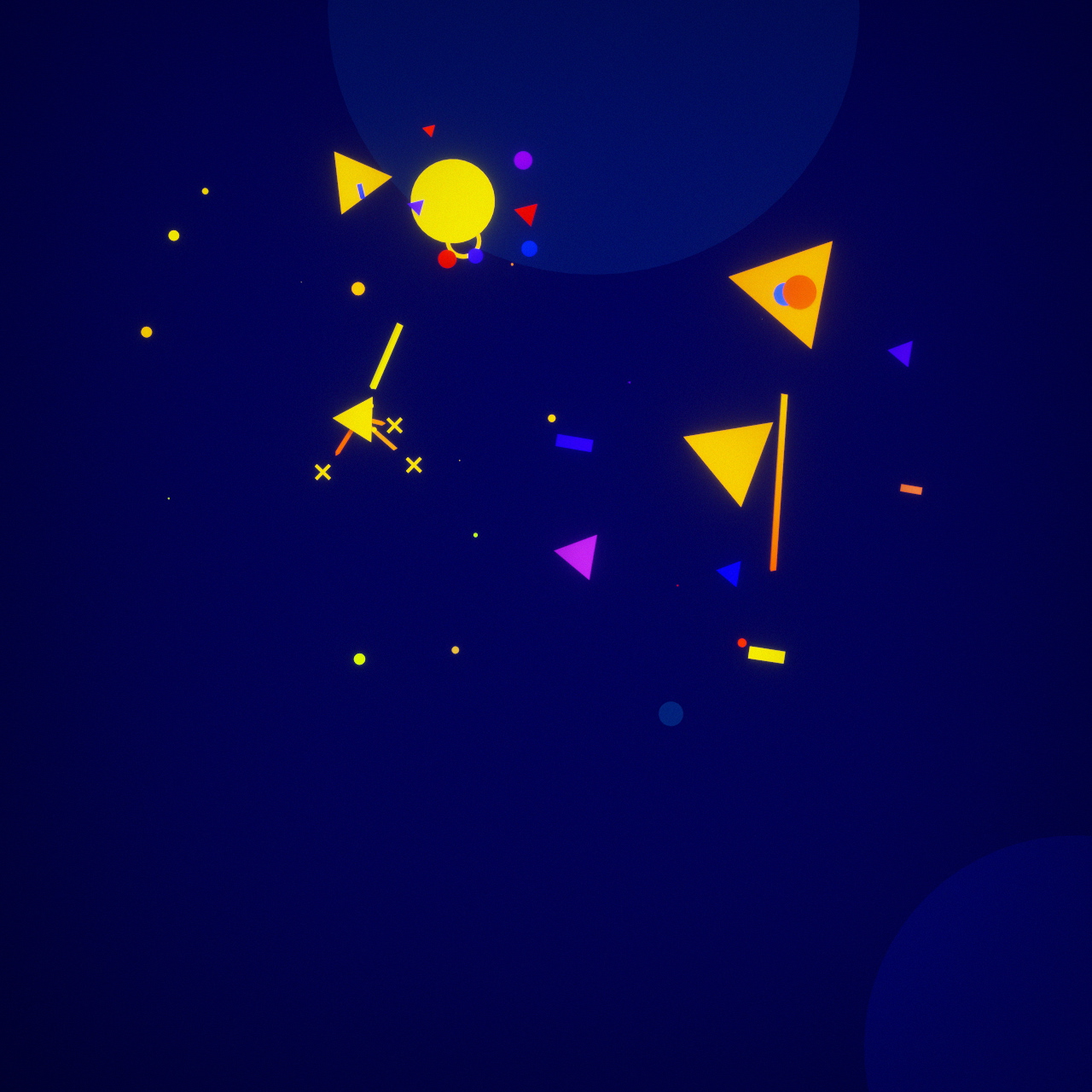

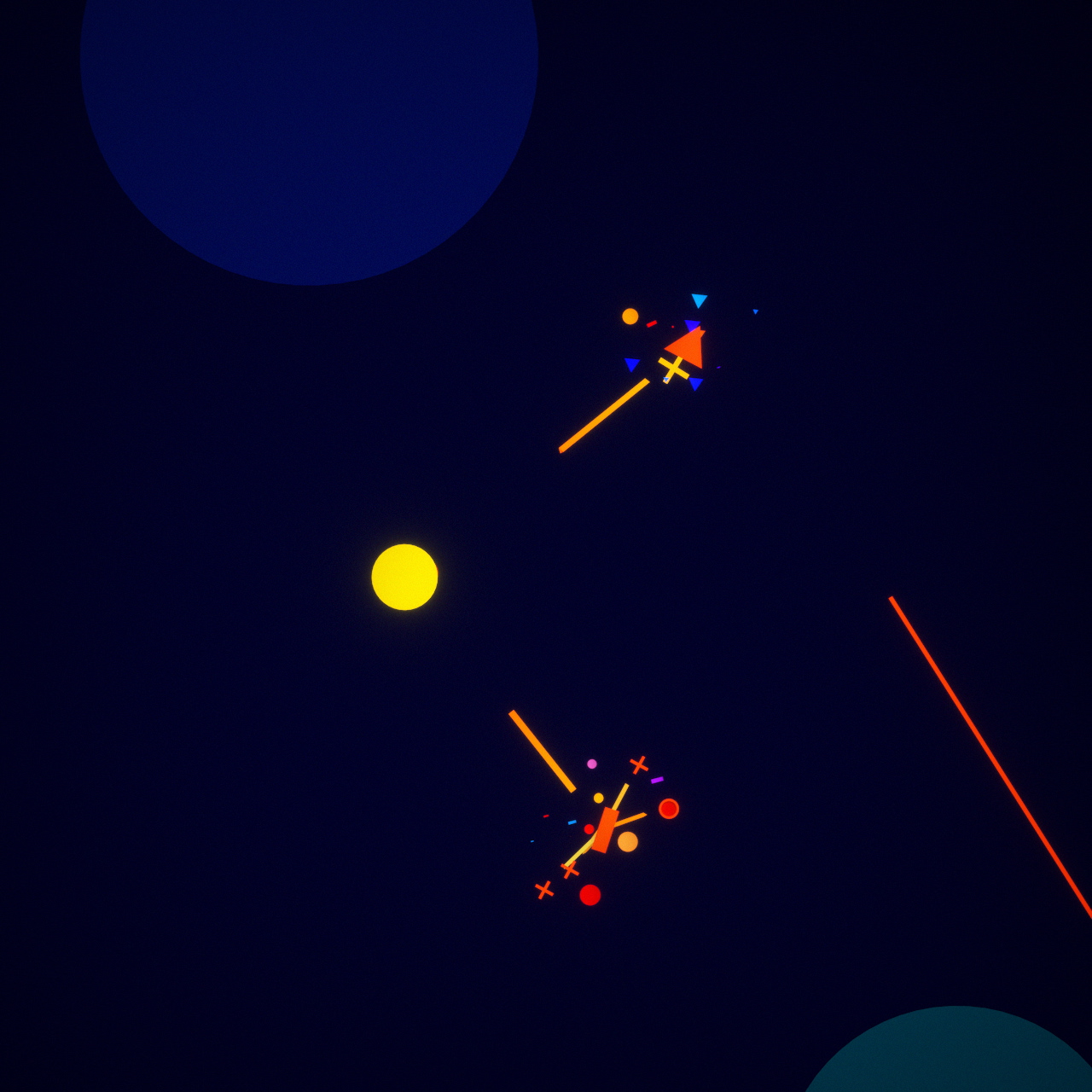

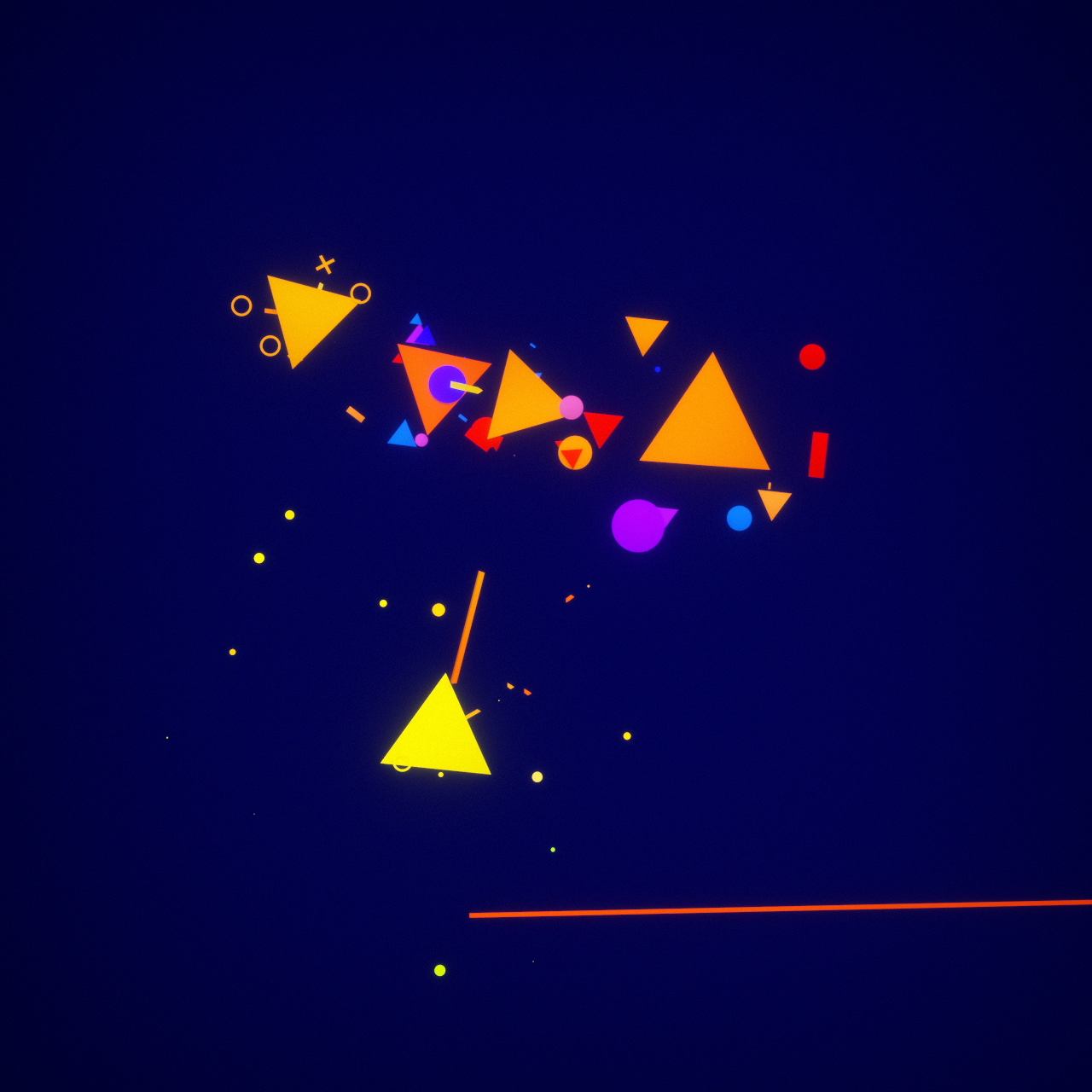

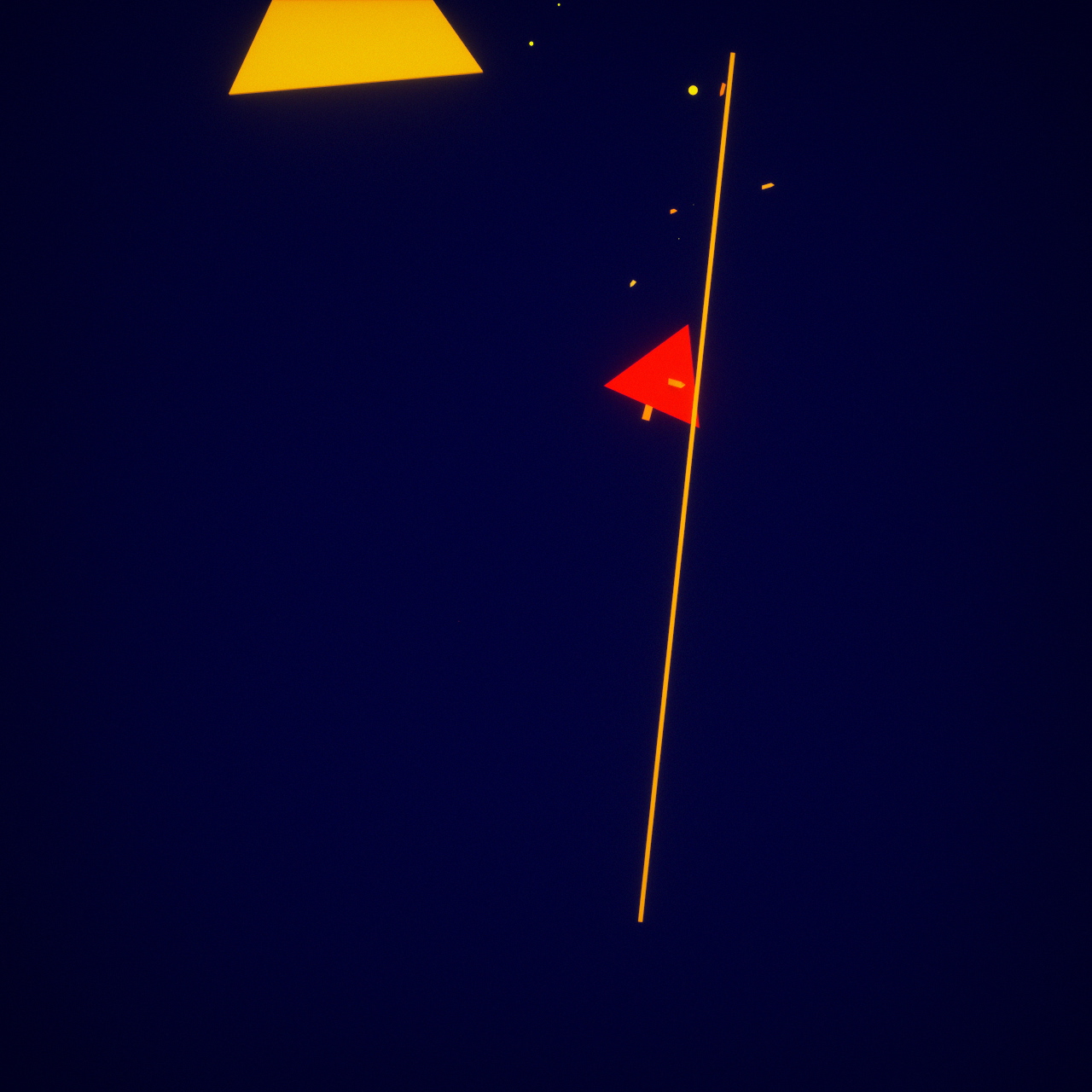

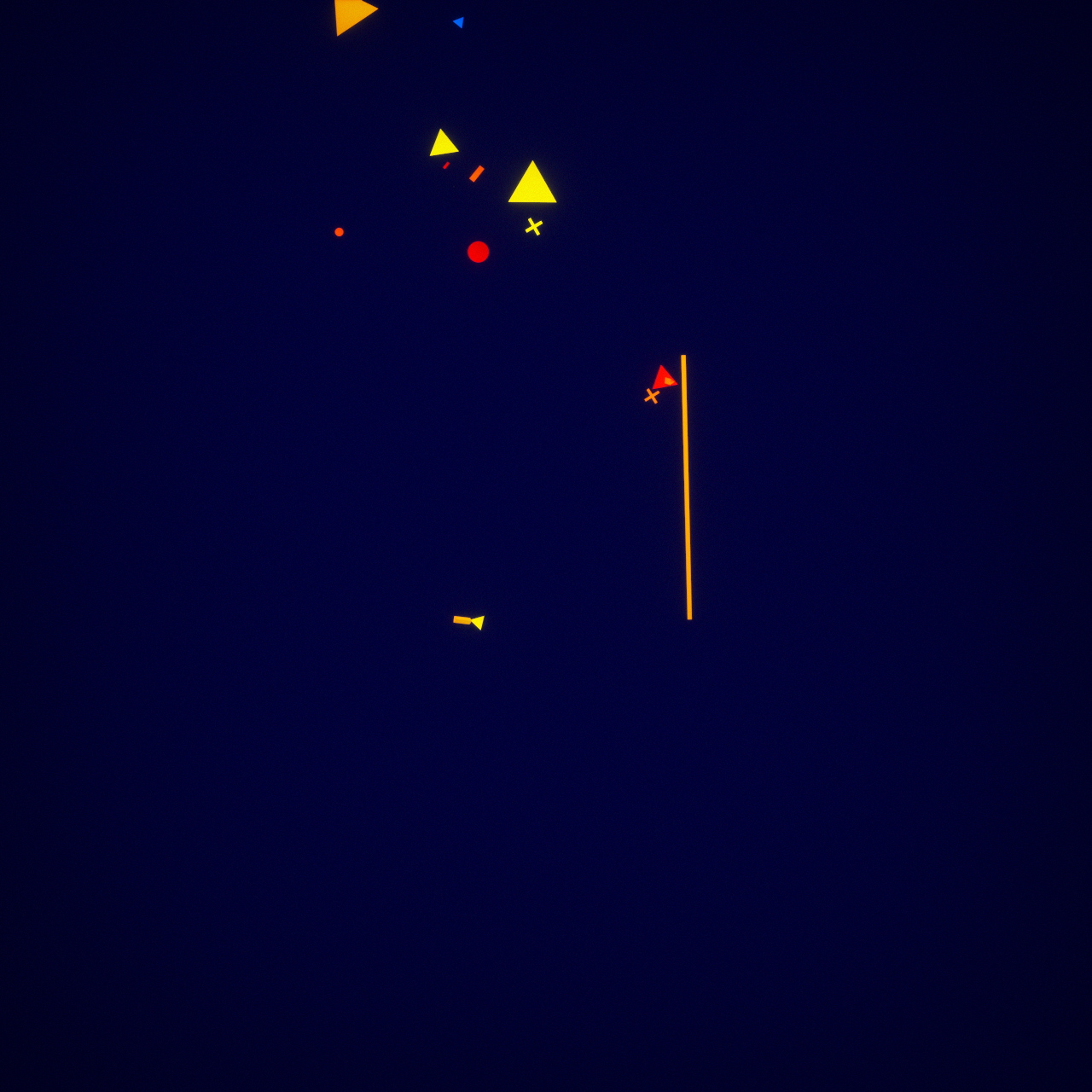

I enjoy the results partly because I just don't know what to expect. I find it interesting just to look at the stills. I love working in colour; it's refreshing, almost therapeutic, especially as a lot of my stuff is fairly clinical and muted. I was also inspired by Manoloide and his beautiful use of colour in generative artwork.

Using machine learning music is interesting because I could fairly easily create an almost endless piece of animation, and I don't even have to worry about licensing the music (I don't think!). I chose this section of music fairly randomly because I had recorded so much data that I was beginning to get lost in it a bit. I simply chose a section intuitively rather than being too analytical.

Thanks to Alex Eckford for helping with the audio.
