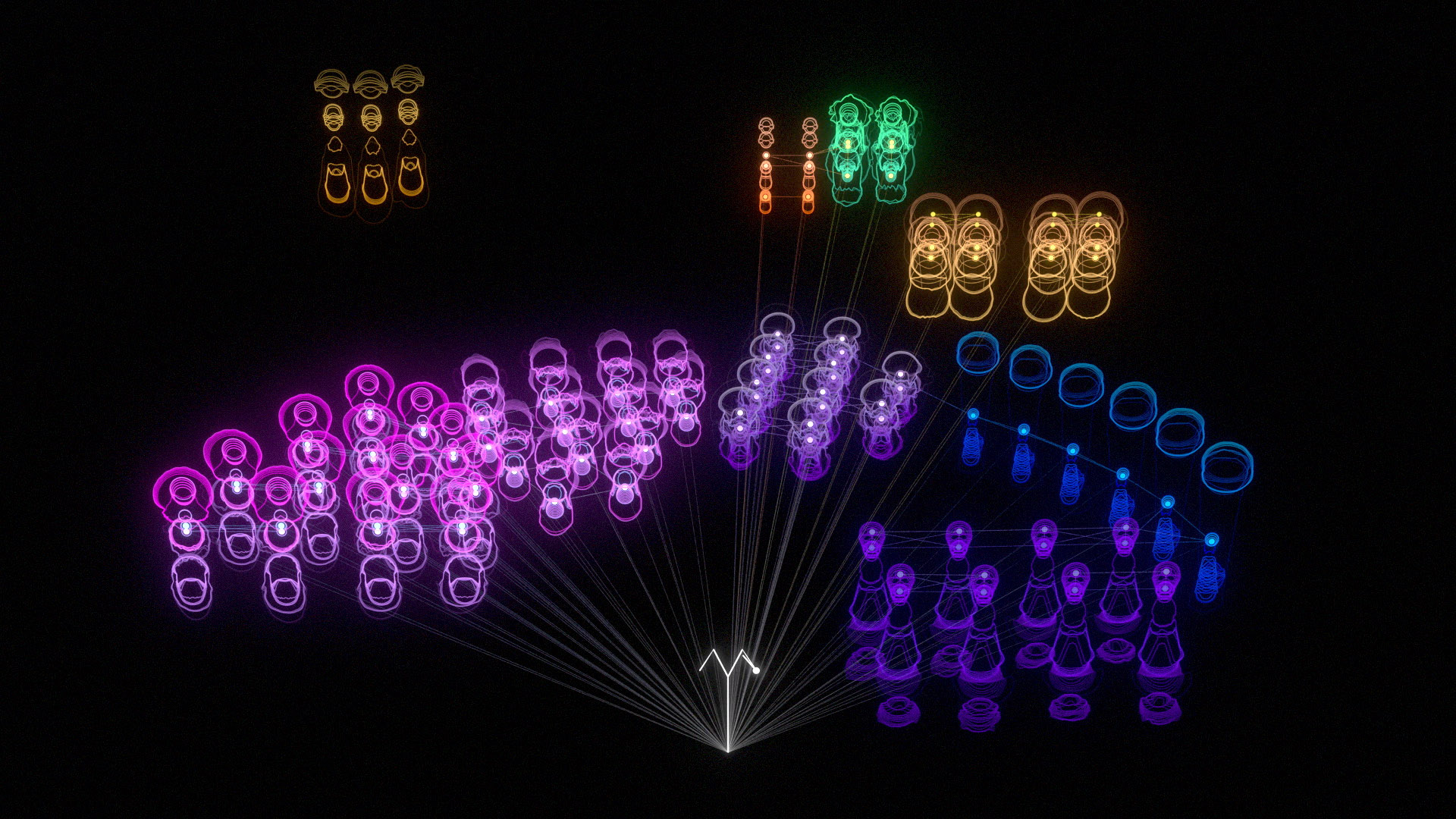

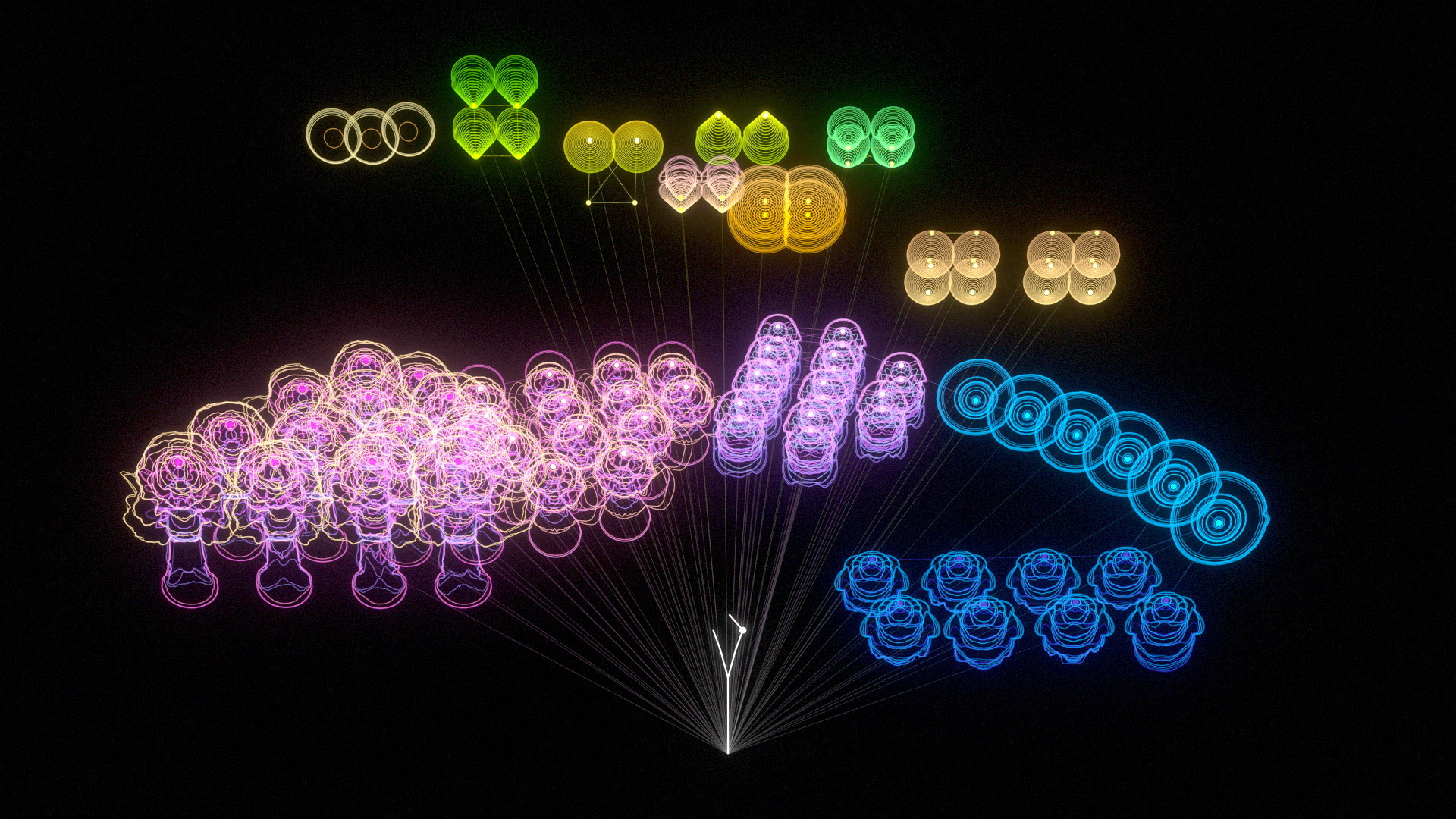

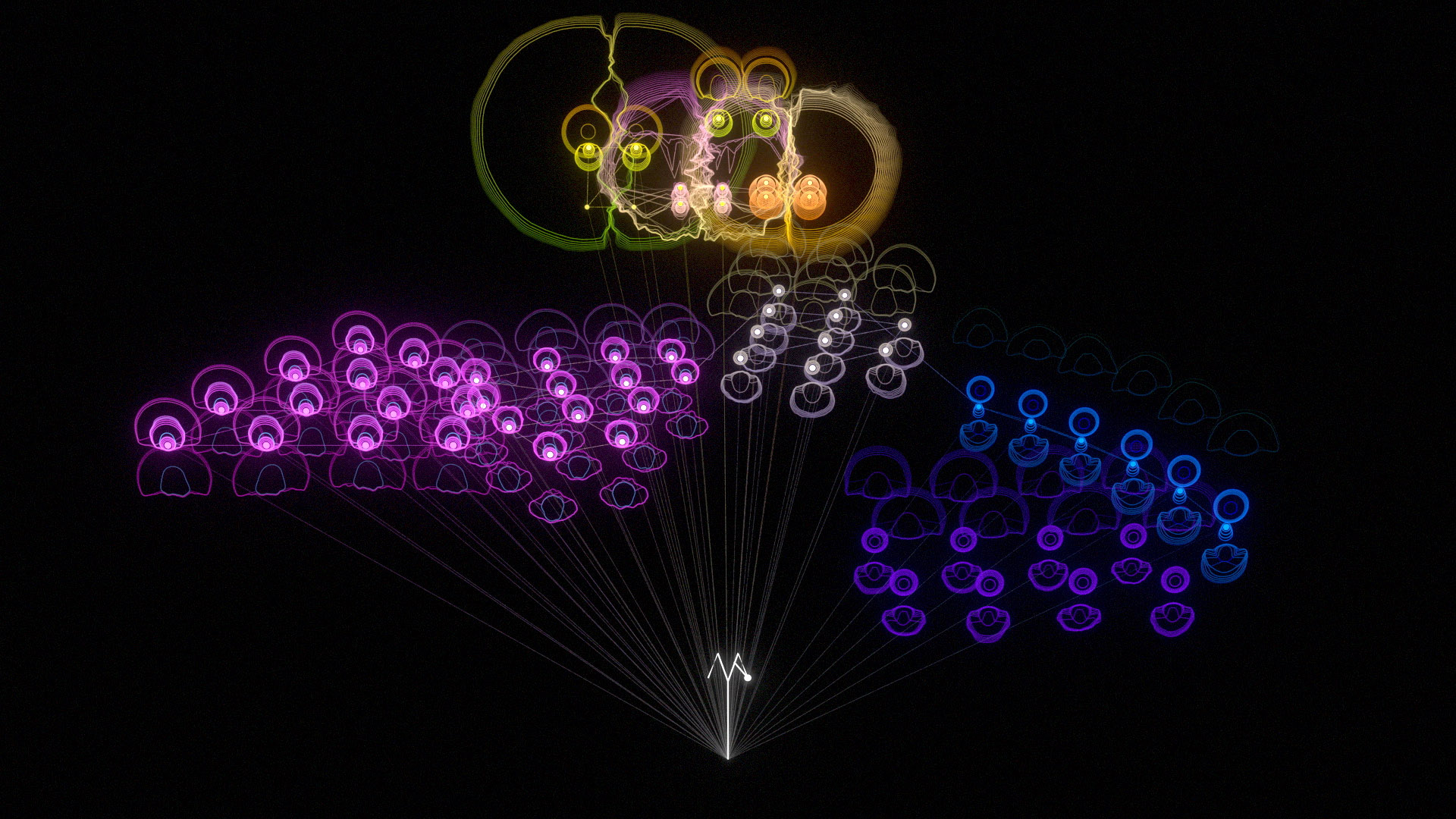

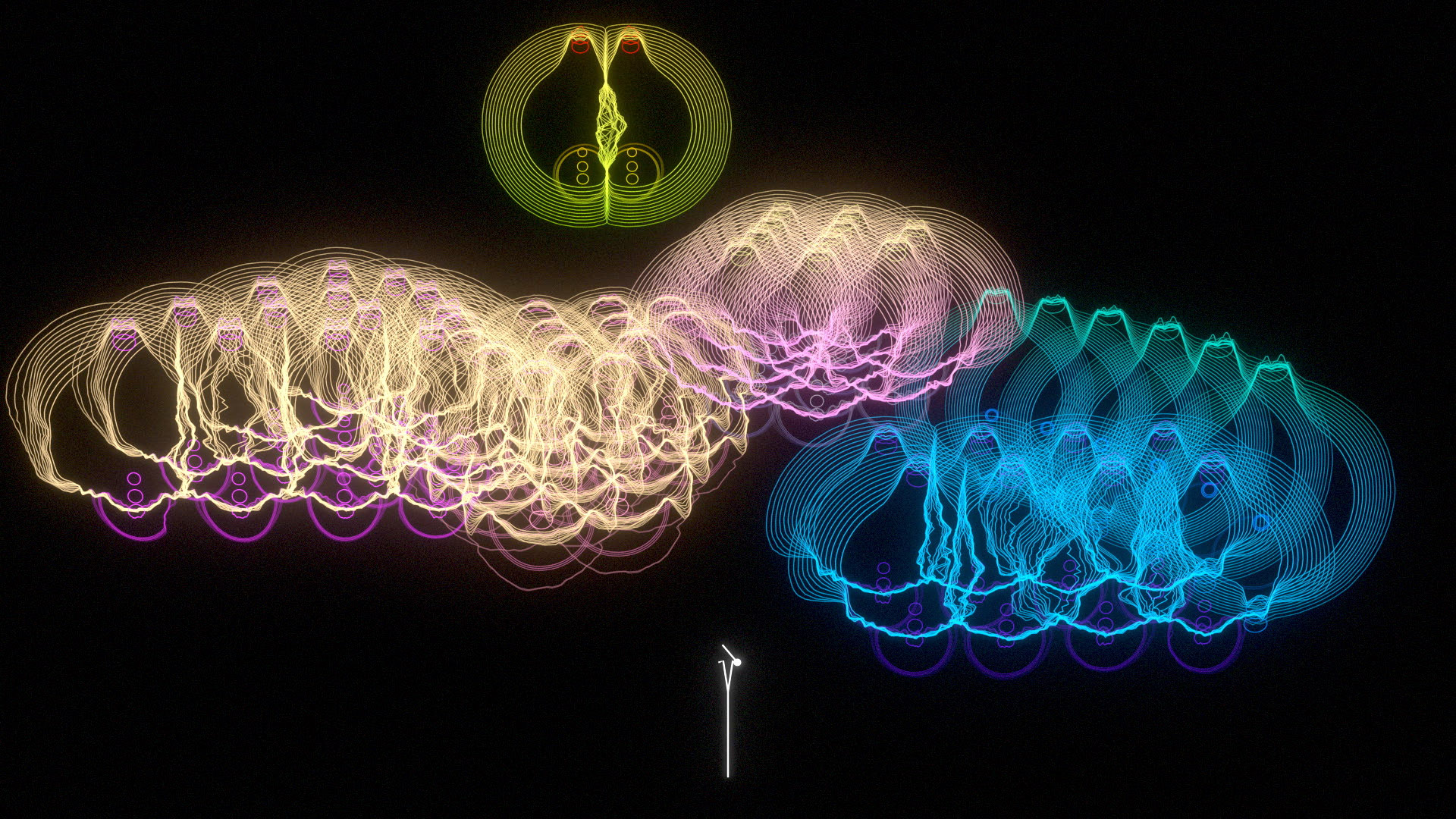

This is part of a self-initiated series exploring the combination of audio and visuals.

It was quite a fun one because I plugged in the data, hit render and didn't really know what would come out. Usually I'd hand-animate every last detail, but this one is essentially generative. It was used in a TEDx talk on a large Hologauze screen (see below).

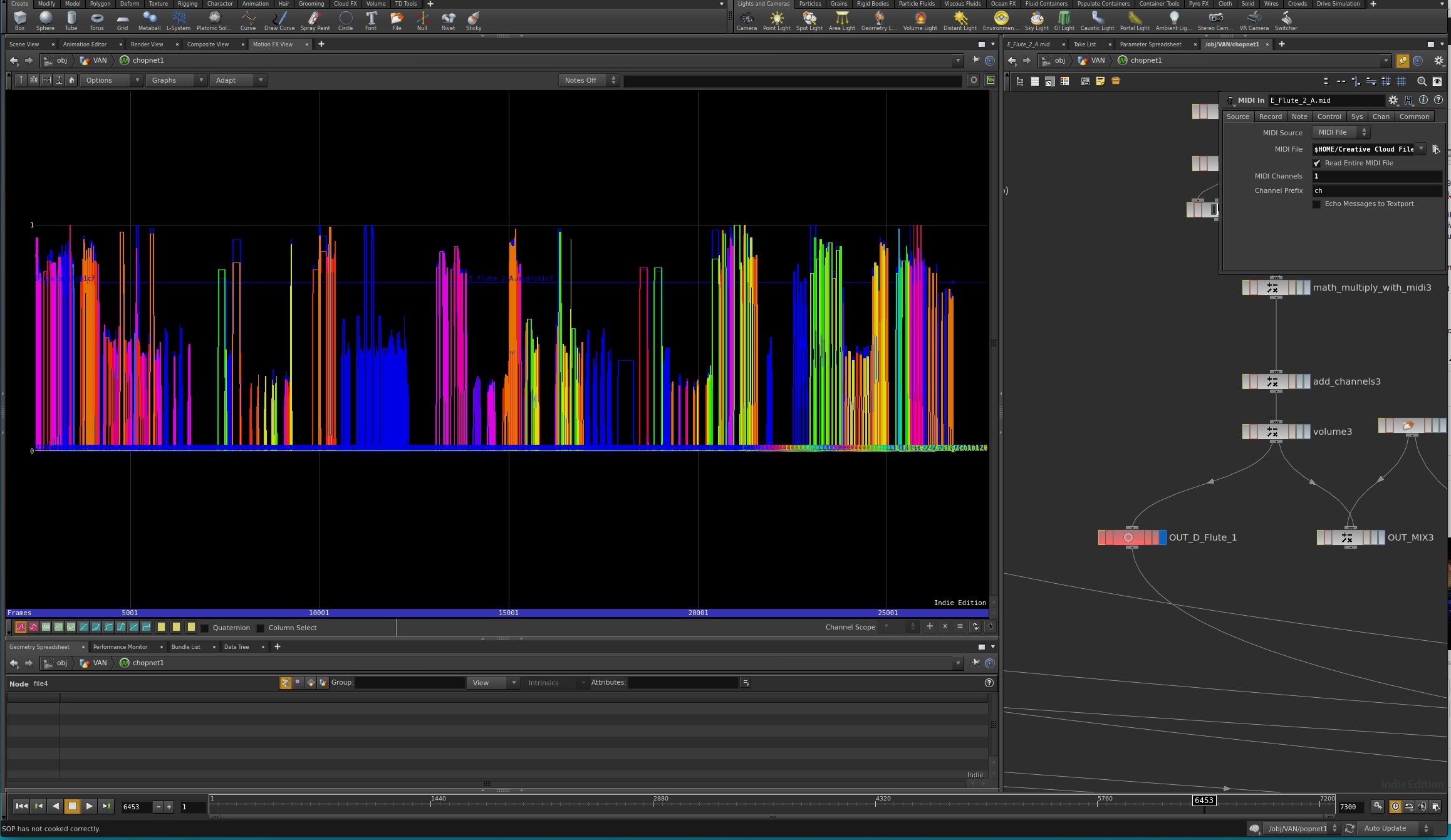

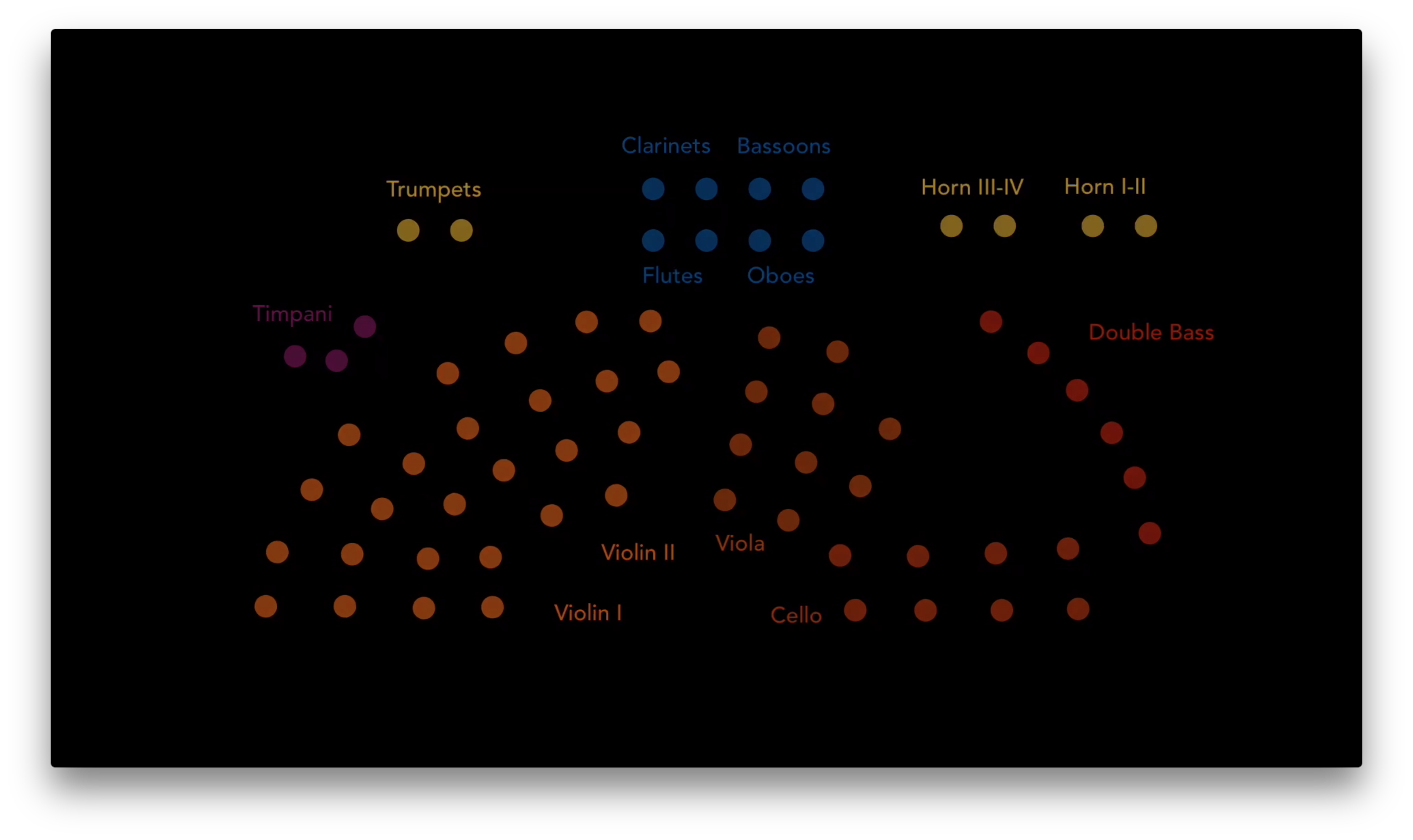

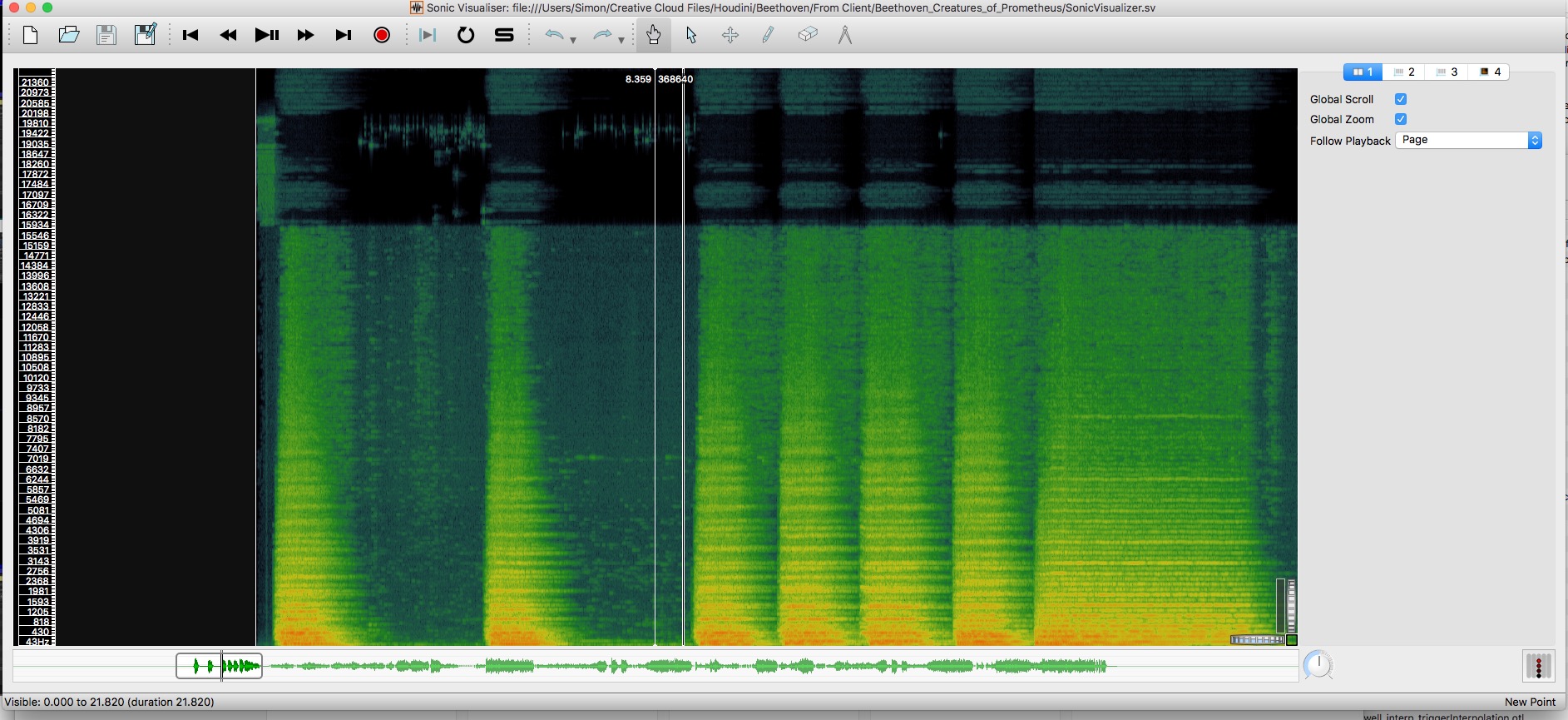

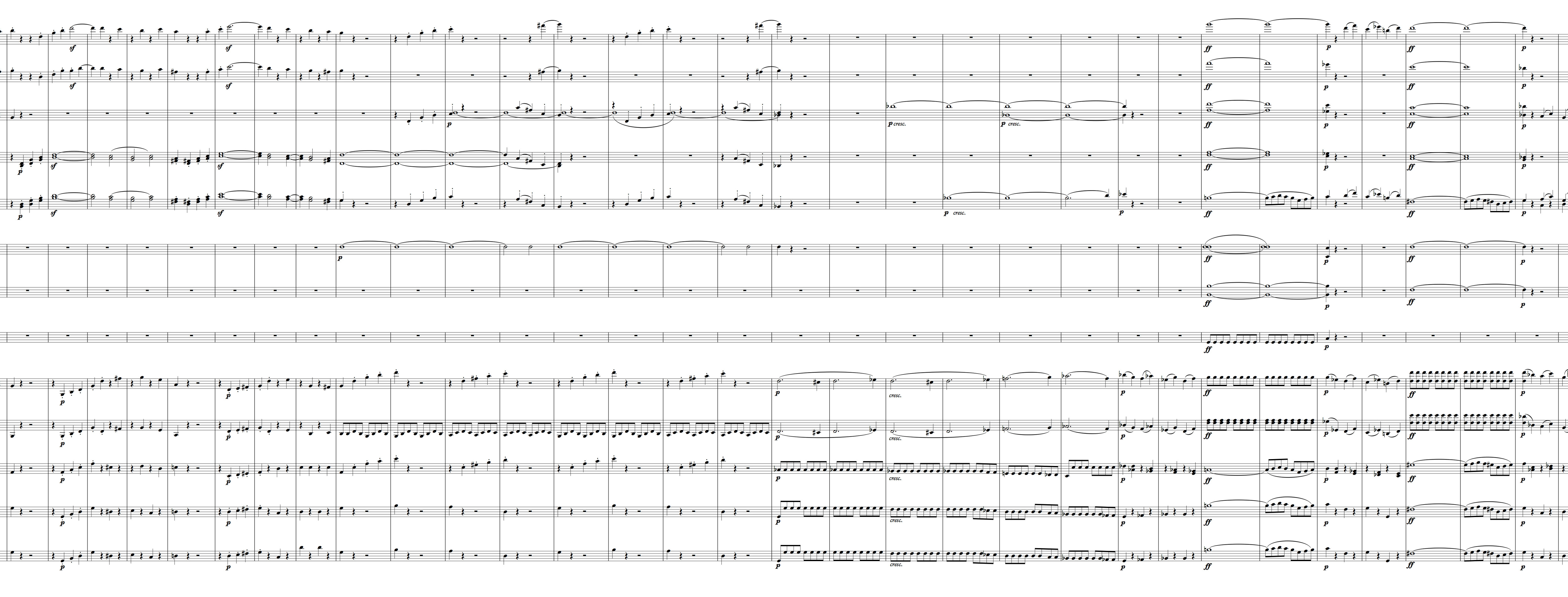

The animation is driven mainly through a MIDI file. The Houdini setup reads the notation and emits particles, using the pitch to derive their height and the amplitude to derive their speed. As the volume of each note increases, it also affects the colour emitted. The setup also reads the previous note, determines whether the current note is higher or lower, and pitches the particles up or down respectively.
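For anyone curious how that kind of mapping hangs together, here is a rough Python sketch of the idea. It is not the actual Houdini network: the note format (time, pitch, velocity), the `Emission` record, the `notes_to_emissions` function, the scaling ranges and the colour ramp are all assumptions made up for illustration, and MIDI velocity stands in for the amplitude/volume mentioned above.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical note record: (start time in seconds, MIDI pitch 0-127, velocity 0-127).
Note = Tuple[float, int, int]


@dataclass
class Emission:
    time: float                         # when the particles are emitted
    height: float                       # derived from pitch
    speed: float                        # derived from velocity (loudness)
    colour: Tuple[float, float, float]  # RGB, shifts as the note gets louder
    direction: int                      # +1 tilt up, -1 tilt down, 0 level


def lerp(a: float, b: float, t: float) -> float:
    """Linear blend between a and b for t in [0, 1]."""
    return a + (b - a) * t


def notes_to_emissions(notes: List[Note],
                       max_height: float = 10.0,
                       max_speed: float = 5.0) -> List[Emission]:
    """Turn a list of notes into per-note particle emission settings."""
    emissions: List[Emission] = []
    prev_pitch = None
    for time, pitch, velocity in notes:
        p = pitch / 127.0     # normalised pitch
        v = velocity / 127.0  # normalised loudness

        # Compare with the previous note to decide which way to tilt.
        if prev_pitch is None or pitch == prev_pitch:
            direction = 0
        elif pitch > prev_pitch:
            direction = 1
        else:
            direction = -1

        # Assumed colour ramp: cool blue for quiet notes, warm orange for loud ones.
        colour = (lerp(0.1, 1.0, v), lerp(0.3, 0.6, v), lerp(1.0, 0.1, v))

        emissions.append(Emission(time, p * max_height, v * max_speed,
                                  colour, direction))
        prev_pitch = pitch
    return emissions
```

Feeding it something like `notes_to_emissions([(0.0, 60, 64), (0.5, 67, 127), (1.0, 62, 32)])` gives one emission per note: the second is higher, faster and warmer than the first and tilts up, and the third tilts back down.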

Many thanks to Alex Eckford for creating music from the MIDI, and to Greg Felton and Alan Martyn at Amphio for the data and the help.

https://simonfarussell.com

http://www.alexeckford.com

http://amphio.co

